Why Do So Many AI Projects Fail?

What if you spent more than half a trillion dollars and got almost nothing in return? IDC estimates that by 2028, corporate spending on AI solutions will climb to $632 billion from just over $300 billion in 2025. Yet, despite this massive investment, many AI projects fail, while those that do survive often produce no meaningful financial benefit.

In August 2025, MIT generated considerable controversy with its report, The GenAI Divide, which cited a striking statistic: “Just 5% of integrated AI pilots are extracting millions in value, while the vast majority remain stuck with no measurable P&L impact.” In other words, 95% of AI pilots “fail” in some capacity. Data from other recent research and analysis tell a similar story:

- RAND found that over 80% of AI projects fail.

- S&P Global says 46% of AI projects are abandoned before production.

- McKinsey reports that over 80% of firms have not achieved bottom-line impact from generative AI.

- Gartner’s 2025 Hype Cycle for Artificial Intelligence notes that fewer than 30% of leaders report satisfactory returns from their AI investments.

However, the consensus across the research used for this article is that these failures stem not from the technology itself but from a fundamental misunderstanding of what AI is, what it can do, and what it takes to make it succeed.

The “Vehicle Problem”: We Don’t Even Know What We’re Talking About

One of the biggest hurdles in AI is the ambiguity of the word itself. In their book AI Snake Oil, Princeton researchers Arvind Narayanan and Sayash Kapoor draw a comparison to an imaginary world where the word “vehicle” is used to describe everything from a bicycle to a rocket ship.

“Conversations in this world are confusing. There are furious debates about whether or not vehicles are environmentally friendly, even though no one realizes that one side of the debate is talking about bikes and the other side is talking about trucks.”

In fact, even the research is often vague about what “AI” means, and a large share of the survey results presented in this article focus specifically on generative AI. As a result, many people now treat “AI” as synonymous with generative AI tools like ChatGPT, which creates the impression that this type of AI can solve any business problem. However, other types of AI, such as predictive AI, computer vision, and expert systems, are often better suited than generative AI to specific organizational challenges. For instance, the generative AI that writes marketing copy is very different from the predictive AI used to forecast equipment failures.

Hype vs. Reality

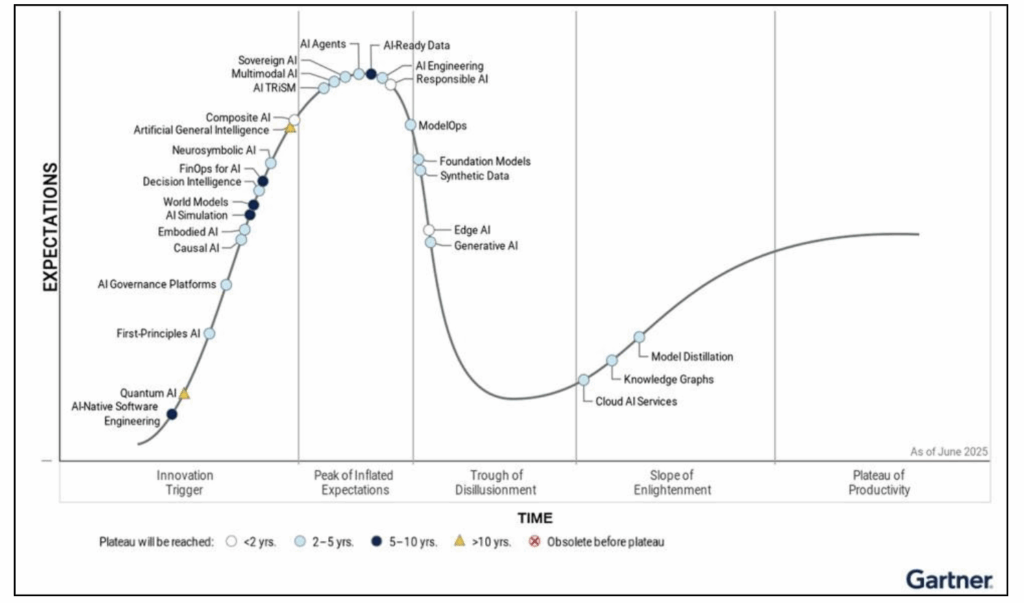

The “Vehicle Problem” highlights the confusion surrounding any emerging technology, which leads to a disconnect between a new technology’s promise and its business reality. Gartner’s Hype Cycle provides a popular framework that’s used to visualize the path that technology products travel from their initial conception to their eventual (although not always) commercial viability and mainstream adoption.

Gartner Hype Cycle for Artificial Intelligence 2025

The Five Phases of the Hype Cycle

The Innovation Trigger phase is reserved for the truly novel next-generation AI concepts that are still primarily in research labs. This phase generates excitement and high expectations among early adopters and is characterized by high-risk, early proof-of-concept stories and prototypes.

Hype is greatest during the Peak of Inflated Expectations, when a new technology attracts intense media attention, widespread public excitement, and high expectations. Hype isn’t all bad: it generates the interest and awareness needed to foster innovation. But as the name suggests, it also leads to unrealistic expectations, overinvestment, and short-term thinking. This phase produces some success stories, but many more failures.

The next phase is the Trough of Disillusionment, or the reality check phase. This is where the excitement wears off as many implementations fail to deliver on their initial expectations. The companies that progress from here are the ones that build the operations that make results repeatable.

The Slope of Enlightenment follows, where the real-world benefits of the technology become more widely understood and implemented. Finally, technologies arrive at the Plateau of Productivity, where returns are predictable and mainstream adoption takes hold.

The Gartner Hype Cycle helps to explain why so many AI projects fail as they move through the life cycle phases, especially during the transition from the Peak of Inflated Expectations into the Trough of Disillusionment.

The Top Reasons for AI Failure

Across the research, we found that the most common causes of AI deployment failure fall into at least one of three business domains: leadership, data readiness, and engineering/infrastructure.

- Leadership-Driven Failures

The single biggest driver of AI failure, cited by a large majority (84%) of the AI practitioners interviewed in the RAND study, is a lack of clear leadership and strategy. At the Peak of Inflated Expectations, the pressure to “do something with AI” is immense, and executives launch initiatives without a clear strategy or an understanding of which business problems AI can actually solve.

The end result is a model that provides no business value because it can’t solve the problem, it solves the wrong problem, or it optimizes the wrong metric. Likewise, the MIT study found that leaders often focus on the wrong priorities, chasing visible wins in sales and marketing, while the high-ROI, back-office automation opportunities that deliver true process-level transformation are ignored.

Another major issue is underestimating the time, cost, and complexity involved in data preparation, model training, and integration. According to McKinsey’s State of AI report, the management practice with the most significant impact on an organization’s bottom line when deploying AI is tracking well-defined Key Performance Indicators (KPIs), yet fewer than one in five respondents say their organizations track them.

Perhaps this is because, as the OECD’s cross-country report on AI adoption in enterprises found, many managers have a “plug-and-play conception of adoption, expecting AI to be a commodity technology they can easily integrate into core business processes.” This disconnect creates what the MIT report calls a “pilot-to-production chasm,” where enthusiasm gets pilots started, but a lack of strategic patience and focus prevents them from being integrated into core workflows.

Leaders don’t need to be AI experts, but they must understand that successful AI implementation requires a top-down vision, a clear roadmap, and well-defined KPIs. Leaders should work closely with technical teams to define an explicit, enduring business problem and be ready to redesign their workflows and processes around AI.

- Data-Driven Failures

After leadership failures, data is the next biggest culprit in disappointing AI deployments. According to the RAND report, much of the problem lies in poor data quality. Organizations often lack sufficient fit-for-purpose data and instead rely on misaligned legacy datasets, collected for compliance or basic record keeping, that lack the context needed to train an effective model.

The OECD report adds that many businesses have not historically collected the right information, and the data they do have is often siloed, fragmented, or stored in incompatible formats. The issue is often compounded by managers who don’t understand what data is needed in the first place and who are reluctant to share critical data with AI systems because of competitive or legal concerns.

Gartner reports that 63% of organizations lack suitable data practices for AI and predicts that through 2026, organizations will abandon 60% of AI projects that aren’t supported by AI-ready data. Data problems usually surface in the Trough, which helps explain why Gartner places so much weight on evolving data management for AI as part of climbing the Slope of Enlightenment in its AI Hype Cycle. To succeed, a project must be supported by a dataset that faithfully and completely represents the specific use case, including every pattern, error, and outlier needed to properly train and run the model.

- Engineering & Infrastructure Failures

Even with strong leadership and good data, AI projects can still fail due to poor technical implementation and inadequate infrastructure, which are generally exposed during the Trough phase.

Internal engineering teams often lack the capability to build adaptive systems, a shortcoming the MIT report calls the “learning gap”: the absence of persistent memory that would let a system absorb feedback, remember user preferences, and improve over time. Until enterprise tools close that gap, the report argues, user trust and impact on core workflows will remain limited.

In addition to the learning gap, many of the reports cite a persistent shortage of specialized skills. The deficit extends beyond data scientists to machine learning (ML) engineers, who deploy and maintain models, and data engineers, who build the data pipelines. Without the talent to turn theoretical models into production-ready systems, projects remain stuck in the proof-of-concept stage.

Finally, underinvestment in infrastructure is another major roadblock to successful AI projects. The RAND report notes that organizations consistently fail to invest in the operational infrastructure (MLOps) needed to deploy, monitor, and maintain AI models in production. S&P Global’s report on AI infrastructure reinforces this, finding that 37% of organizations feel their infrastructure limitations prevent them from keeping pace with AI advancements. The cost of this infrastructure is also a major factor, with the same report noting that budget has become the number one driver of project failure, cited by 35% of respondents. The specialized hardware required, such as GPUs and high-performance storage, is expensive and can strain IT budgets, leading to projects being abandoned before they deliver value.

Beyond the Technology: Building the Foundation for Success

Technology on its own is rarely the bottleneck to successful AI deployment. The research says the fix is mostly organizational and operational: leadership that owns a business KPI and a roadmap, data that’s fit for the job and well governed, and engineering and infrastructure that make reliability and cost visible every day. By moving beyond the hype, understanding the different “vehicles” of AI, and committing to the hard work of organizational change, companies can move onto the Slope of Enlightenment, where leaders can begin to unlock the transformative potential that AI has promised.

Lead by Learning: “Introduction to AI for Managers”

IntelliGenesis’s IntelliCademy™ training division developed Introduction to AI for Managers to guide leaders through the persistent challenges of AI and toward successful deployments. This executive-level course focuses on demystifying AI for non-technical decision-makers, covering the very issues that lead to project failure: understanding AI fundamentals, establishing governance, aligning projects with mission needs, and differentiating between hype and realistic capabilities.