AI Demystified: What is a Neural Network?

What if we could replicate the brain’s capacity for pattern recognition, problem-solving, and decision making, and put it to work in a machine that can learn and improve over time?

Neural networks are that mechanism—modeled after the interconnected structure and reasoning function of the human brain. Just as the brain learns by engaging with the world through sensory input, neural networks learn by experiencing the world through data and algorithms.

The Role of a Neural Network in Artificial Intelligence

Neural networks are a key part of many AI technologies, like voice assistants and facial recognition. The diagram below shows how they fit into the overall AI hierarchy, with machine learning being a subset of AI, deep learning being a subset of machine learning, and a neural network being a specific machine learning technique.

Source: AI vs. Machine Learning vs. Deep Learning: Know the Differences

In a nutshell, all neural networks are machine learning, but not all machine learning involves neural networks. Similarly, all deep learning models rely on neural networks, but not all neural networks are deep learning models. The distinction between deep learning and shallow neural networks lies in depth: a shallow network has only one or very few hidden layers, while deep learning models stack many hidden layers, often in more sophisticated designs.

Breaking Down the Structure of a Neural Network

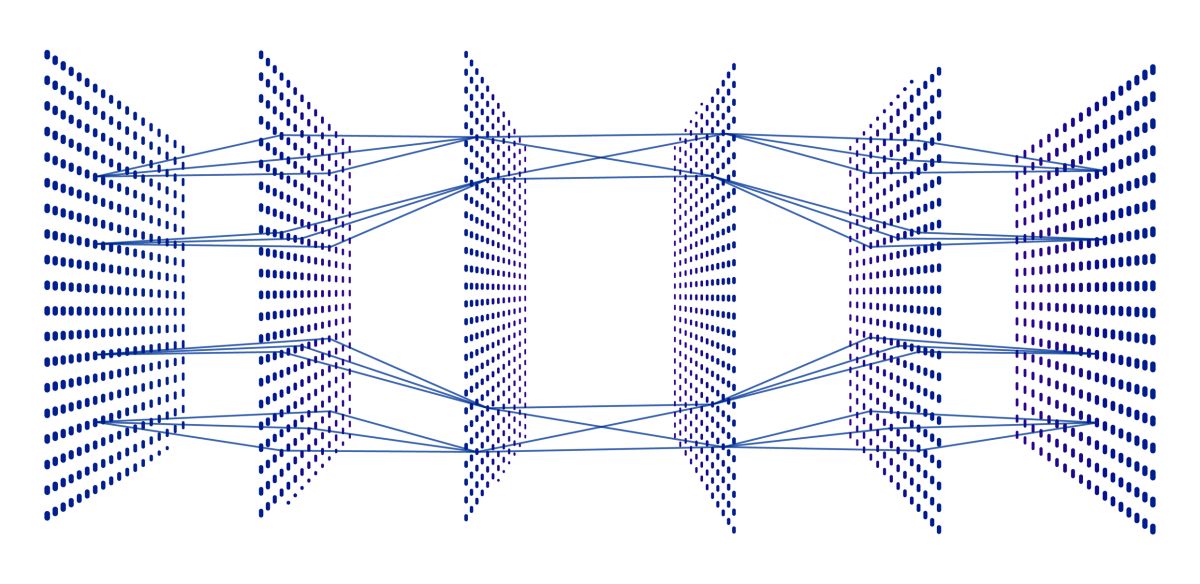

A typical neural network is organized into layers of artificial neurons, or nodes. Each neuron can be thought of as an independent computational unit, which collects data from other neurons, processes it, and then produces a result.

The layers include an input layer, one or more hidden layers, and an output layer. Each neuron in a layer is connected to neurons in the next layer through weighted connections. The weight, which is a number that establishes the strength of the connection, determines how much influence one neuron has over another.

Source: What is a Neural Network and how do they work?

How Neural Networks Learn

When the network is first set up, its connection weights and biases are typically assigned random values, so its performance is poor at the outset.
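As a rough illustration of the layered structure and random starting values described above, here is a minimal sketch in plain Python. The function name `init_network`, the layer sizes, and the choice of a uniform range between -1 and 1 are all assumptions made for this example; real libraries use more carefully chosen initialization schemes.

```python
import random

def init_network(layer_sizes, seed=0):
    """Build random weights and biases for a fully connected network.

    layer_sizes is a list such as [3, 4, 2]: 3 inputs, one hidden layer
    of 4 neurons, and 2 outputs (sizes are arbitrary, for illustration).
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    layers = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        layers.append({
            # one row of weights per neuron in the receiving layer,
            # one weight per incoming connection
            "weights": [[rng.uniform(-1.0, 1.0) for _ in range(n_in)]
                        for _ in range(n_out)],
            # one bias per neuron in the receiving layer
            "biases": [rng.uniform(-1.0, 1.0) for _ in range(n_out)],
        })
    return layers

network = init_network([3, 4, 2])
for index, layer in enumerate(network):
    print(f"layer {index}: {len(layer['weights'])} neurons, "
          f"{len(layer['weights'][0])} incoming connections each")
```

Note that the input layer has no weights of its own in this sketch: weights belong to the connections that lead into each later layer.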

During training, the network is given data with known outputs for every input. This is called supervised learning, as opposed to unsupervised learning, where the network isn’t given the correct output and has to learn on its own.

Source: Unboxing Weights, Biases, Loss: Hone in on Deep Learning

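Each neuron processes this data by multiplying each input by the weight of its connection, summing the results, and adding a bias. The bias shifts the neuron’s result independently of its inputs, which helps the neuron learn more complex relationships between the inputs and outputs.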
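The calculation a single neuron performs at this step can be sketched in a few lines of Python. The function name `weighted_sum` and the example numbers are made up for illustration, and the sketch assumes a neuron with several inputs, each with its own weight.

```python
def weighted_sum(inputs, weights, bias):
    """Multiply each input by its connection weight, add them up, add the bias."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias

# Three illustrative inputs, one weight per input, and a single bias
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -1.0, 0.25]
bias = 0.1

z = weighted_sum(inputs, weights, bias)
print(z)  # 0.5 - 2.0 + 0.75 + 0.1, roughly -0.65
```

A negative weight, like the second one here, means that input pushes the neuron’s result down rather than up.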

The result is then passed through an activation function, which determines how strongly the neuron’s output contributes to the prediction process. In the simplest case, the neuron “activates” and sends its output to the neurons in the next layer only if the result meets a certain threshold; many commonly used activation functions produce a graded output instead of an all-or-nothing signal.

The activation function also introduces non-linearity into the process. Without it, a neural network could only compute linear functions of its inputs, no matter how many layers it had. That would severely restrict what the network can learn, because real-world data is typically complex and non-linear.
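To make the point about non-linearity concrete, the sketch below uses two common activation functions, sigmoid and ReLU, and a toy "network" with one input and one neuron per layer. The weights and biases are arbitrary values chosen for the example. It shows that two stacked layers with no activation function behave exactly like a single linear layer, while adding ReLU between them breaks that equivalence.

```python
import math

def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Pass positive values through unchanged; output 0 for negative values."""
    return max(0.0, z)

# Arbitrary illustrative parameters for two single-neuron layers
W1, B1 = 2.0, 1.0
W2, B2 = -3.0, 0.5

def two_linear_layers(x):
    # No activation function between the layers
    return W2 * (W1 * x + B1) + B2

def one_linear_layer(x):
    # Algebraically identical: weight W2*W1 and bias W2*B1 + B2
    return (W2 * W1) * x + (W2 * B1 + B2)

def two_layers_with_relu(x):
    # Same parameters, but with a non-linear activation in between
    return W2 * relu(W1 * x + B1) + B2

for x in [-2.0, 0.0, 1.0]:
    print(x, two_linear_layers(x), one_linear_layer(x), two_layers_with_relu(x))
```

For every input, the first two functions agree, so the extra layer adds nothing. The ReLU version differs for inputs where the first layer’s result is negative, which is what lets deeper networks represent curves and bends rather than only straight lines.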

This process repeats until the data reaches the output layer, where the final decision or prediction is made. The predictions are then compared to the correct answers. If the predictions are wrong, the network adjusts its weights and biases to reduce the error between the expected and actual results.
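The layer-by-layer pass and the comparison with the correct answer might look something like the following sketch. It assumes a sigmoid activation for every neuron and mean squared error as the measure of error; both are common choices but are assumptions here, as are the hand-picked weights and the helper names `forward` and `mean_squared_error`.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(layers, inputs):
    """Pass the inputs through each layer in turn and return the final outputs."""
    activations = inputs
    for layer in layers:
        activations = [
            sigmoid(sum(w * a for w, a in zip(neuron_weights, activations)) + bias)
            for neuron_weights, bias in zip(layer["weights"], layer["biases"])
        ]
    return activations

def mean_squared_error(predicted, expected):
    """Average of the squared differences between predictions and correct answers."""
    return sum((p - e) ** 2 for p, e in zip(predicted, expected)) / len(predicted)

# A tiny network: 2 inputs -> 2 hidden neurons -> 1 output (values are illustrative)
layers = [
    {"weights": [[0.5, -0.4], [0.3, 0.8]], "biases": [0.1, -0.2]},
    {"weights": [[1.2, -0.7]], "biases": [0.05]},
]

prediction = forward(layers, [1.0, 0.0])
error = mean_squared_error(prediction, [1.0])  # 1.0 is the known correct output
print(prediction, error)
```

The single number returned by `mean_squared_error` is what training tries to make smaller.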

Gradient Descent and Backpropagation

Gradient descent is the method used to tweak the network’s weights and biases to reduce this error, which is measured by a loss function (also called a cost function). The goal of gradient descent is to improve a network’s predictive performance by iteratively minimizing the loss so that the network’s outputs get closer to the correct ones.
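Gradient descent is easiest to see on a problem with a single adjustable weight. The sketch below fits the model `prediction = w * x` to three data points that follow `y = 2 * x`, using mean squared error as the loss. The data, the learning rate of 0.05, and the number of steps are illustrative assumptions, not recommended settings.

```python
def loss(w, xs, ys):
    """Mean squared error of the model prediction = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def gradient(w, xs, ys):
    """Derivative of the loss with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def gradient_descent(xs, ys, w=0.0, learning_rate=0.05, steps=100):
    history = [loss(w, xs, ys)]
    for _ in range(steps):
        # Step in the direction that reduces the loss
        w -= learning_rate * gradient(w, xs, ys)
        history.append(loss(w, xs, ys))
    return w, history

xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # generated by y = 2 * x

w, history = gradient_descent(xs, ys)
print(w)                        # ends up very close to 2.0
print(history[0], history[-1])  # loss before and after training
```

The learning rate controls the step size: too small and training is slow, too large and the updates can overshoot the minimum and fail to settle.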

To do this, the error is traced back through the network, from the output layer through the hidden layers toward the input layer, to see where things went wrong. Each neuron is then told how much it contributed to the overall error, allowing it to adjust its connection weights and bias accordingly. This is called backpropagation and it represents a significant advancement in neural network training.
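A hedged sketch of backpropagation on the smallest possible network, one input, one hidden neuron with a sigmoid activation, and one linear output neuron, is shown below. The parameter names (`w1`, `b1`, `w2`, `b2`), the squared-error loss, the starting values, and the learning rate are all assumptions chosen to keep the chain rule visible; real networks apply the same idea across many neurons at once.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    h = sigmoid(params["w1"] * x + params["b1"])  # hidden neuron output
    y_hat = params["w2"] * h + params["b2"]       # linear output neuron
    return h, y_hat

def loss(params, x, y):
    _, y_hat = forward(params, x)
    return (y_hat - y) ** 2

def backward(params, x, y):
    """Trace the error from the output back to each weight and bias."""
    h, y_hat = forward(params, x)
    d_y_hat = 2.0 * (y_hat - y)       # how the loss changes with the output
    d_h = d_y_hat * params["w2"]      # error passed back to the hidden neuron
    d_z1 = d_h * h * (1.0 - h)        # through the sigmoid's derivative
    return {
        "w1": d_z1 * x,
        "b1": d_z1,
        "w2": d_y_hat * h,
        "b2": d_y_hat,
    }

params = {"w1": 0.5, "b1": 0.0, "w2": -0.3, "b2": 0.1}
x, y = 1.5, 1.0  # one illustrative training example

loss_before = loss(params, x, y)
for _ in range(200):
    grads = backward(params, x, y)
    for name in params:
        params[name] -= 0.1 * grads[name]  # gradient descent update
loss_after = loss(params, x, y)

print(loss_before, loss_after)
```

Each entry returned by `backward` is that parameter’s share of the blame for the error, which is exactly what gradient descent needs in order to decide how far to move it.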

Neural Network Architectures

There are many types of neural networks, and researchers are continually developing new architectures. These are a few of the more common types:

- Convolutional Neural Networks (CNNs): CNNs can detect and learn from patterns within visual data, making them ideal for tasks like facial recognition or object detection.

- Recurrent Neural Networks (RNNs): RNNs have loops that allow them to remember previous inputs. This makes them well-suited for tasks that involve sequential data, like time series prediction or natural language processing.

- Generative Adversarial Networks (GANs): GANs consist of two networks, a generator and a discriminator, that compete with each other. The generator creates new data that looks like the original, while the discriminator tries to tell the generated data apart from the real thing. GANs are often used in image generation, data augmentation, and other applications where generating new data is necessary.

Source: The Neural Network Zoo

Each of these architecture types has its own strengths, weaknesses, and ideal use cases. It is therefore often beneficial to combine multiple neural networks, which can improve performance over a single network by leveraging each model’s strengths. Combining networks can also make the overall model more robust by averaging out the errors and biases of the individual networks.
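One simple way to combine networks, though by no means the only one, is to average their predictions. The sketch below assumes three hypothetical models that have each already produced a score for the same three inputs; the numbers and the function name `average_predictions` are invented for illustration.

```python
def average_predictions(predictions_per_model):
    """Average the predictions of several models, input by input."""
    n_models = len(predictions_per_model)
    return [sum(scores) / n_models for scores in zip(*predictions_per_model)]

# Hypothetical outputs from three separately trained networks
# for the same three inputs (one row per model)
model_outputs = [
    [0.9, 0.2, 0.6],
    [0.7, 0.4, 0.5],
    [0.8, 0.3, 0.7],
]

ensemble = average_predictions(model_outputs)
print(ensemble)  # roughly [0.8, 0.3, 0.6]
```

If one model is too high on a given input and another is too low, their errors partly cancel in the average.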

The Power of Neural Networks

Part of the magic of neural networks lies in the fact that the neurons work in parallel. Unlike a traditional sequential program, which handles tasks one at a time, each neuron within a layer performs its own calculation independently of the other neurons in that layer, so these calculations can all run simultaneously. This allows the network to process vast amounts of information at once.
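The reason the neurons in a layer can run in parallel is that none of them needs another’s result: each one only reads the same inputs. The sketch below illustrates that independence by computing a layer once in a plain loop and once by handing each neuron to a thread pool. The function names and values are assumptions for the example, and Python threads will not actually make this arithmetic faster; in practice, layers are usually computed as matrix operations on parallel hardware such as GPUs.

```python
from concurrent.futures import ThreadPoolExecutor

def neuron_output(weights, bias, inputs):
    """Weighted sum for one neuron; depends only on the shared inputs."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias

def layer_sequential(layer_weights, layer_biases, inputs):
    # One neuron after another
    return [neuron_output(w, b, inputs)
            for w, b in zip(layer_weights, layer_biases)]

def layer_concurrent(layer_weights, layer_biases, inputs):
    # Each neuron is submitted as an independent task
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(neuron_output, w, b, inputs)
                   for w, b in zip(layer_weights, layer_biases)]
        return [future.result() for future in futures]

# Illustrative layer with three neurons and two inputs
layer_weights = [[0.2, -0.5], [1.0, 0.3], [-0.7, 0.9]]
layer_biases = [0.1, 0.0, -0.2]
inputs = [1.0, 2.0]

print(layer_sequential(layer_weights, layer_biases, inputs))
print(layer_concurrent(layer_weights, layer_biases, inputs))
```

Both versions produce the same outputs, which is the point: the order in which the neurons of a layer are evaluated does not matter.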

As the network is exposed to more data during training, it gradually learns abstract and hierarchical relationships in the data that allow it to make accurate predictions or classifications that often exceed the capabilities of rule-based algorithms.

This computational ability, combined with optimization techniques like gradient descent and backpropagation, is what makes neural networks a powerful tool in the field of artificial intelligence and so effective at tasks that involve complex patterns and relationships.

Neural Networks in the Real World

A delightful example of this technology in action is BakeryScan, an early image recognition technology developed in 2007 by a company called BRAIN to identify pastries at a Japanese bakery. Fast-forward to 2017, when a doctor saw a TV spot for BakeryScan and realized that cancer cells looked a lot like bread under a microscope. This led to a collaboration with BRAIN to utilize the BakeryScan technology for cancer cell detection. Today, that adapted technology, called Cyto-AiSCAN, can identify cancer cells with 99% accuracy.

The ability to take AI-based technologies like neural networks that were initially trained on one dataset and successfully apply them to entirely new applications demonstrates the true power of these advanced technologies.